Apple announced at its Worldwide Developers Conference (WWDC) on June 9 that Live Translation will be integrated into Macs, alongside broader upgrades to its Apple Intelligence generative AI capabilities. Apple has historically been cautious about integrating advanced AI features into its products. Instead of aiming for the cutting edge, it has primarily partnered with OpenAI, integrating ChatGPT to deliver now-standard generative AI capabilities on its laptops, phones, and wearable devices.

‘The silence surrounding Siri was deafening’

Meanwhile, Apple SVP of software engineering Craig Federighi said the long-anticipated update to Siri — meant to align with other major generative AI assistants — remains in development.

“We’re continuing our work to deliver the features that make Siri even more personal,” he said at WWDC. “This work needed more time to reach a high-quality bar, and we look forward to sharing more about it in the coming year.”

“The silence surrounding Siri was deafening; the topic was swiftly brushed aside to some indeterminate time next year,” said Dipanjan Chatterjee, VP and principal analyst at Forrester, in an email to eWeek. “Apple continues to tweak its Apple Intelligence features, but no amount of text corrections or cute emojis can fill the yawning void of an intuitive, interactive AI experience that we know Siri will be capable of when ready.”

Apple introduces visual intelligence, Live Translation, and AI quality-of-life updates

One upgrade expands the visual intelligence image analysis feature to the entire iPhone screen instead of only select apps. Similar to Android’s Circle to Search with Google Gemini, visual intelligence is advertised primarily as a tool for identifying and shopping for items seen on social media. Users can select an item and search for similar products within certain retail apps or via Google.

Other enhancements to Apple Intelligence include:

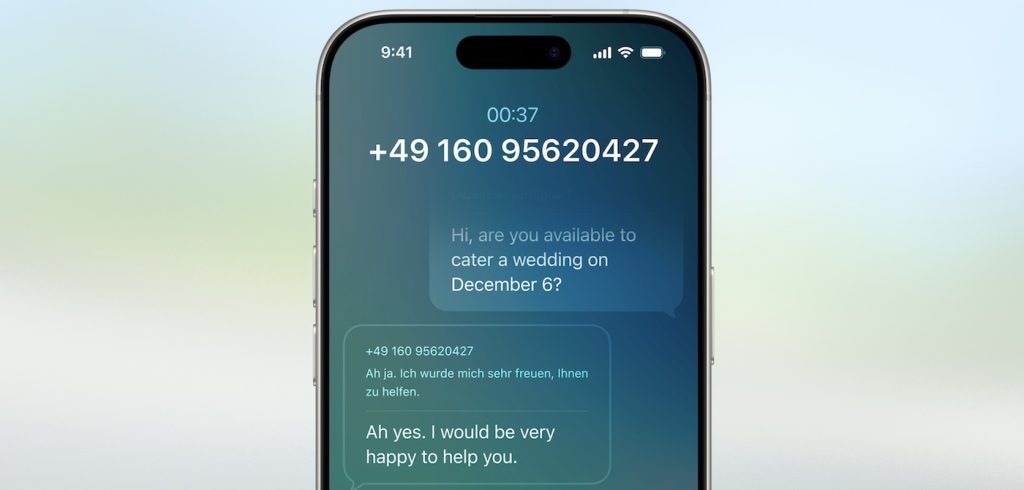

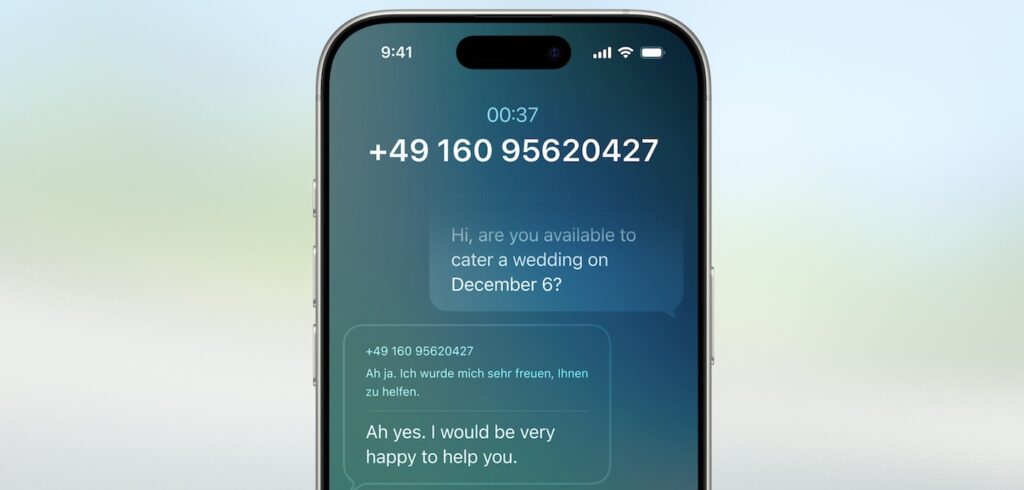

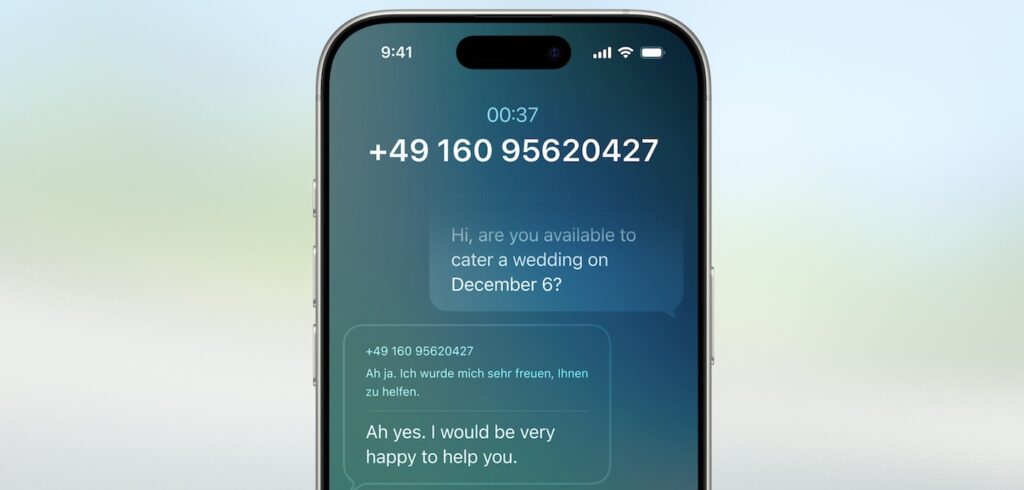

- Live Translation is coming to Messages, FaceTime, and Phone.

- Genmoji and Image Playground can now create more customized images, including combinations of emoji and images generated with ChatGPT.

- Workout Buddy provides personalized encouragement and progress updates on Apple Watch. The feature also requires Bluetooth headphones and a nearby iPhone.

- Shortcuts in macOS will be able to interpret natural-language queries, making device search more flexible and enabling automated workflows based on the user’s behavior.

“Now, the models that power Apple Intelligence are becoming more capable and efficient, and we’re integrating features in even more places across each of our operating systems,” Federighi said in a press release.

The features announced at WWDC will be available this fall on newer iPhones, iPads, Macs, Apple Watches, and Apple Vision Pro. Members of the Apple Developer Program can begin testing them starting June 9.

Developers can now hook into Apple’s on-device AI with an API

For developers, the Foundation Models framework will give apps direct access to the on-device model behind Apple Intelligence, avoiding cloud API fees. The framework includes native support for Swift, Apple’s programming language for building apps on its platforms.

In addition, Xcode 26 will integrate ChatGPT. Developers will be able to use the generative AI assistant for coding, writing documentation, generating tests, iterating on code, and scanning for errors. The AI support can be accessed through the floating Coding Tools menu.

Starting with iOS 26, the App Intents framework will support visual intelligence, allowing developers to surface results from their apps directly in visual intelligence searches.

“Apple has made a strategic decision in prioritizing its core principles of user privacy and security over rushing quick flashy AI features,” said Nabila Popal, senior research director at analyst firm IDC, in an email to eWeek.