How a ChatGPT-Generated Fake Report Made Its Way to the White House Website

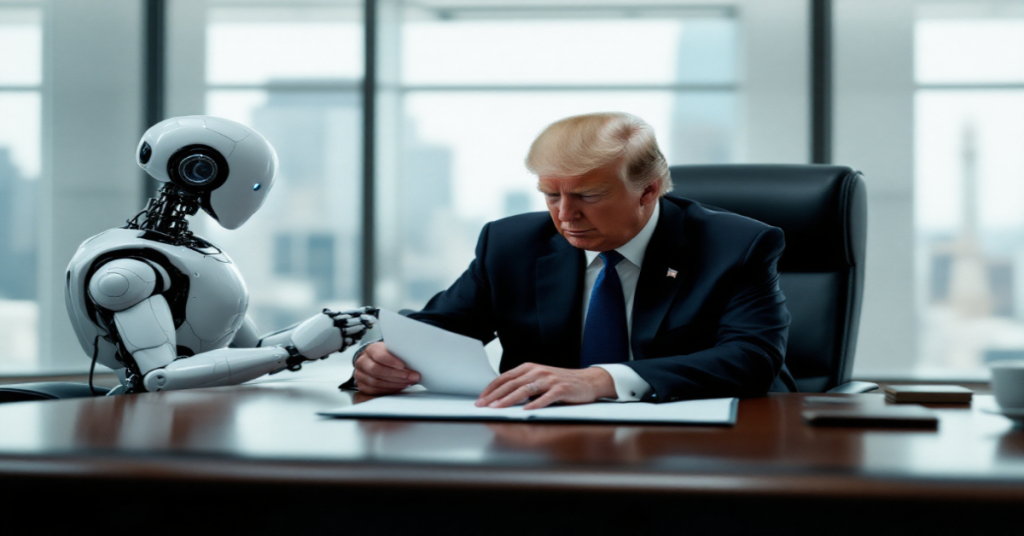

In 2025, artificial intelligence (AI) continues to reshape industries and the way we work and interact with technology. In a surprising twist, however, ChatGPT, the language model developed by OpenAI, recently played a key role in producing a report with fabricated content that made its way onto the White House website. The document, an official US government publication, was later found to cite nonexistent research attributed to real authors and to contain multiple factual errors.

This incident is a stark reminder of both the power of AI and the challenges that arise as tools like ChatGPT become more capable. In this article, we explore how ChatGPT-generated material slipped into an official US government document, what the incident implies, and why AI-generated misinformation is a growing concern.

The Fake Report at the White House: What Happened?

In May 2025, a report published as “WH-The-MAHA-Report-Assessment” appeared on the White House website. At first glance, it looked like a typical government publication, offering findings on public health and policy recommendations. On closer examination, however, reviewers found evidence that ChatGPT had been used to produce parts of the content, including citations and data that turned out to be fabricated.

Key findings from the investigation into the report’s origins include:

Fake References and Sources

The report cited several studies that do not exist, yet attributed them to real researchers. Pairing genuine names with fictional research and data made the citations look credible and raised serious doubts about the document's authenticity. ChatGPT produced plausible-sounding references, but the studies behind them were fabricated.
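One way to catch this kind of fabrication before publication is to check every citation against a bibliographic index you trust. The sketch below assumes such an index is available as a Python dict mapping each DOI to the author names on record; the `Citation` class, the `flag_unverified` function, and the sample DOIs are hypothetical, and a real workflow would look references up in a service such as Crossref rather than a hard-coded dict.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Citation:
    """A single reference as it appears in a report (hypothetical structure)."""
    authors: List[str]
    title: str
    doi: Optional[str] = None


def flag_unverified(
    citations: List[Citation], trusted_index: Dict[str, Set[str]]
) -> List[Tuple[str, str]]:
    """Return (title, reason) for every citation that cannot be confirmed.

    trusted_index is an assumed lookup table: DOI -> set of author names on record.
    """
    problems: List[Tuple[str, str]] = []
    for c in citations:
        # A missing or unknown DOI suggests the study may not exist at all.
        if not c.doi or c.doi not in trusted_index:
            problems.append((c.title, "DOI not found in trusted index"))
            continue
        # A real DOI credited to authors who are not on record suggests misattribution.
        missing = [a for a in c.authors if a not in trusted_index[c.doi]]
        if missing:
            problems.append((c.title, "authors not on record: " + ", ".join(missing)))
    return problems


if __name__ == "__main__":
    # Invented sample data for illustration only.
    index = {"10.1000/example.1": {"A. Author", "B. Author"}}
    refs = [
        Citation(["A. Author"], "Real study", "10.1000/example.1"),
        Citation(["A. Author"], "Invented study", "10.1000/example.9"),
        Citation(["C. Writer"], "Misattributed study", "10.1000/example.1"),
    ]
    for title, reason in flag_unverified(refs, index):
        print(f"{title}: {reason}")
```

The check flags both failure modes described above: a study that cannot be found at all, and a real record credited to someone who did not write it. It only narrows the list for a human reviewer; it cannot confirm that a study says what the report claims.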

Erroneous Conclusions and Mistakes

On review, experts found multiple factual errors in the report's conclusions. Although it was presented as policy guidance grounded in scientific data, many of its claims contradicted established research. The AI-generated content misrepresented what the underlying studies found, raising questions about both the tool used to produce the document and the review it received before publication.

The ‘oaicite’ Marker: A Clear Sign of ChatGPT’s Involvement

In the citations section, the string “oaicite” appeared in several references. This marker is associated with ChatGPT's citation formatting, so its presence indicates that the material was copied directly from ChatGPT, a telltale sign that the content was pasted in without sufficient review or editing.
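Because a leftover marker is plain text, even a simple scan can catch it before a document is published. The following is a minimal sketch under stated assumptions: the `SUSPECT_MARKERS` list and the `find_ai_markers` function are hypothetical, the list matches both the “oaicite” form and the “oacite” spelling, and it is illustrative rather than an exhaustive AI-content detector.

```python
import re
from typing import List, Tuple

# Strings treated as signs of pasted ChatGPT citation output (an assumption:
# this list is illustrative, not exhaustive).
SUSPECT_MARKERS = ("oaicite", "oacite")

# One case-insensitive pattern that matches any of the markers literally.
_MARKER_RE = re.compile("|".join(re.escape(m) for m in SUSPECT_MARKERS), re.IGNORECASE)


def find_ai_markers(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, stripped_line) for every line containing a marker."""
    return [
        (number, line.strip())
        for number, line in enumerate(text.splitlines(), start=1)
        if _MARKER_RE.search(line)
    ]


if __name__ == "__main__":
    # Invented sample text; the marker placement is illustrative only.
    sample = (
        "Doe J. Screen time and sleep. 2023.\n"
        "https://example.org/study [oaicite:3]\n"
        "Roe K. Diet trends in children. 2022.\n"
    )
    for number, line in find_ai_markers(sample):
        print(f"line {number}: {line}")
```

A clean result from a scan like this proves nothing on its own, since anyone who edits the text can remove the marker; it is a cheap first pass, not a substitute for checking the sources.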

Despite these flaws, the White House has continued to defend the document as an important publication based on sound scientific data. The report remains online, which raises concerns about how AI-generated content is reviewed and verified before it appears on official government channels.

How Chatronix Helps Prevent AI Misinformation in Official Documents

As AI tools like ChatGPT become more prominent in content creation, reliable methods for checking the accuracy of their output matter more than ever. Chatronix offers an AI-powered workspace where users can run multiple models, such as ChatGPT, Claude, and Gemini, side by side and compare their results to cross-check the information being generated.

With Chatronix, users can:

- Run multiple AI models simultaneously to verify the accuracy and credibility of the content.

- Organize and store AI-generated content for review, ensuring all outputs meet the required standards.

- Collaborate with teams to validate and refine content before publication, preventing the spread of misinformation.

For just $25/month, Chatronix empowers individuals, businesses, and organizations to utilize AI tools responsibly, preventing errors and ensuring that AI-generated content aligns with real-world facts.


Why AI-Generated Content Is a Concern for Government and Business

The White House incident shines a light on the risks of using AI tools without proper oversight and validation. As AI tools like ChatGPT become more prevalent, they are being integrated into content generation across industries, including media, business, and government. Here are some of the key concerns regarding the rise of AI-generated content:

1. Lack of Verification

AI models like ChatGPT generate content quickly, but without fact-checking and verification processes in place, errors can easily reach publication. As the White House incident shows, AI-generated content can pass as credible unless human reviewers validate it thoroughly.

2. Ethical Concerns

AI models like ChatGPT can produce content that reads well, but they have no real understanding of ethics or context. They generate text from patterns in their training data, some of which may be unreliable, and can present errors with the same confidence as facts. This raises ethical questions about how AI tools should be used in professional and official settings.

3. AI’s Role in Misinformation

As seen in this case, AI can inadvertently spread misinformation. If left unchecked, it could cause significant issues, especially when used in contexts like government reports, where accuracy and accountability are critical. This incident serves as a warning for organizations that adopt AI tools without a clear understanding of their limitations.

The Future of AI in Content Creation: Ensuring Responsibility

The ChatGPT incident at the White House highlights the need for greater accountability in the use of AI-powered tools. As AI-generated content continues to gain traction, it is essential that organizations implement stricter fact-checking procedures, especially when publishing content that will impact public perception or influence policy decisions.

While AI offers incredible opportunities for automation, speed, and efficiency, it also demands human oversight to ensure that the content generated is both accurate and ethical. Integrating AI into workflows can be highly beneficial, but it must be done with care.

Conclusion: AI Misinformation and How Chatronix Can Help Prevent It

The ChatGPT-generated fake report at the White House is an important reminder of the risks of using AI-powered content generation without proper verification. As AI tools continue to evolve and spread across industries, it is essential to adopt practices that ensure AI-generated content is verified, fact-checked, and aligned with ethical standards.

With Chatronix, you can ensure that your use of AI tools like ChatGPT remains responsible and accurate. Chatronix allows you to run multiple AI models simultaneously, compare their outputs, and collaborate with others to verify the validity of the generated content.

Start using Chatronix to optimize your AI content creation and ensure its accuracy.

Alexia is an author at Research Snipers, covering technology news including Google, Apple, Android, Xiaomi, Huawei, Samsung, and more.