The search continues Thursday for a suspect in the fatal shooting of conservative activist and commentator Charlie Kirk at an event Wednesday at Utah Valley University. CBS News Confirmed has gathered information from officials, as well as video and audio sources, and has constructed this timeline of the assassination of the 31-year-old.

This timeline will be updated as more information emerges.

11:52 a.m. MT (1:52 p.m. ET): Shooter arrives near campus

The shooter arrived near the university campus at around 11:52 a.m., officials said at a news conference Thursday morning. The event was scheduled to begin in the UVU courtyard at noon. Videos from the scene show a large crowd gathered in the area.

Adam Bartholomew / @Lifeisdriving / Mainstreet Media Utah

Around 12:23 p.m. MT (2:23 p.m. ET): Kirk is shot during a campus event

A CBS News review of livestream footage and video metadata indicates Kirk was shot around 12:23 p.m. Footage from that moment shows the crowd scattering and Kirk being carried into a vehicle seconds after the shooting.

Screen grab from Instagram account of jeremyking_80

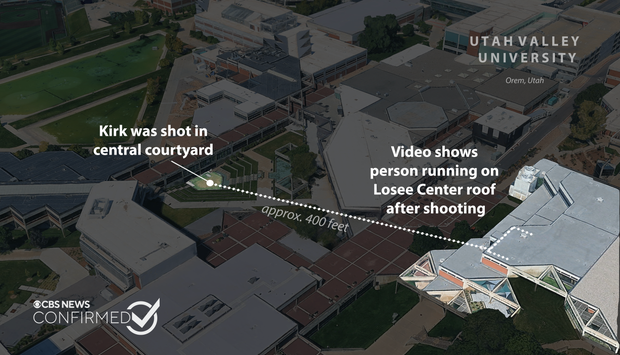

Additional video verified by CBS News shows a person running across the roof of the Losee Center in the moments after the shooting, about 400 feet from where Kirk had been sitting. Authorities have not confirmed whether that person is connected to the attack.

X account of Dustin Ivers

Earlier, a Utah Valley University spokesman and the Utah Department of Public Safety had said the shooting occurred around 12:20 p.m.

12:27 p.m. MT (2:27 p.m. ET): Dispatch audio describes person on roof of building carrying long rifle

Dispatch audio reviewed by CBS News described a person on the roof of a nearby building wearing black clothing and carrying a long rifle. A later radio call said the individual was dressed in black tactical gear, including a helmet.

Beau Mason of the Utah Department of Public Safety said Thursday that investigators believe the suspect fired from a roof, then jumped from the building and fled into a nearby neighborhood. A UVU spokeswoman said Wednesday the university believed the shot was fired from the campus’ Losee Center, shown in the map below.

This map shows the location where Kirk was shot, in UVU’s central courtyard, which is surrounded by campus buildings.

CBS News Confirmed team

This map may be updated as more information becomes available.

Later, as law enforcement officers investigated, police tape was visible on the roof of the building where the person had been seen around the time of the shooting.

Michael Ciaglo / Getty Images

2:37 p.m. MT (4:37 p.m. ET): Officers search area near campus

Video captured at 2:37 p.m. shows officers searching an area around a quarter mile north of the campus. CBS News confirmed the time using the video’s metadata.

X account of phil_lyman

2:40 p.m. MT (4:40 p.m. ET): Trump announces Kirk’s death

President Trump posted on Truth Social that Kirk had died, writing, “The Great, and even Legendary, Charlie Kirk, is dead. No one understood or had the Heart of the Youth in the United States of America better than Charlie. He was loved and admired by ALL, especially me, and now, he is no longer with us.”

Emma Li, Layla Ferris, Laura Doan, Maddie Kornfeld and Julia Ingram contributed to this report.