In the wake of reports that dozens of teen boys have died by suicide since 2021 after being victimized by blackmailers, Apple is relying on its Communication Safety features to help protect potential victims, but some critics say it isn’t enough.

Cases of teens being preyed on by blackmailers are on the rise. The scammers either trick victims into providing explicit images or simply use AI to fabricate images for blackmail. The outcomes are often tragic.

A new report from the Wall Street Journal profiles several young victims who ultimately took their own lives rather than face the humiliation of real or faked explicit images of themselves becoming public. Such images of minors are known as Child Sexual Abuse Material, or CSAM.

The profile mentions the teens’ implicit trust in iPhone messaging as part of the problem.

The WSJ article includes a story about Shannon Heacock, a high-school cheer team coach, and her 16-year-old son, Elijah. After texting his mom about the next day’s activities as usual, Elijah went to bed.

Hours later, Heacock was awakened by her daughter. Elijah had been found in the laundry room, bleeding from a self-inflicted gunshot wound. He died the next morning.

He and two other teens profiled in the article were targeted by criminals who connect with teens on social networks, often by posing as teenage girls. After chatting for a while to gain the victim’s trust, the blackmailer sends fake explicit photos of the “girl” they are posing as and asks for similar images in return.

They then blackmail the victims, threatening to share the images publicly unless they pay with gift cards, wire transfers, or even cryptocurrency.

Payment is demanded immediately. This puts victims under intense time pressure: they must either pay, which they often cannot do, or face humiliation in front of their family, their school, and the public.

Faced with what they see as impossible alternatives, some teens take their own lives to escape the pressure. The scam, known as “sextortion,” has claimed or ruined many young lives.

CSAM detection and Apple’s current efforts

Sextortion cases have skyrocketed with the rise of social networks. The US-based National Center for Missing and Exploited Children (NCMEC) released a study last year showing a roughly 2.5-fold increase in reported sextortion cases between 2022 and 2023.

NCMEC reported 10,731 sextortion cases in 2022 and 26,718 in 2023. Most of the victims were young males. The group advises parents to talk with their teens about online blackmail ahead of time, which can blunt the effect of a blackmailer’s threats.

When the problem first became widespread in 2021, Apple announced a set of child safety tools, including a system to detect known CSAM in users’ iCloud Photos libraries.

The plan included new features in iMessage, Siri, and Search. It also included a mechanism that would have matched hashes of photos uploaded to iCloud Photos against a database of hashes of known CSAM images.

The plan also gave users a way to flag inappropriate messages or images and report the sender to Apple. Image scanning would only have applied to photos stored in iCloud Photos, not to images kept only on the device.

After a massive outcry from privacy advocates, child safety groups, and governments, Apple dropped its plan to scan iCloud Photos against the CSAM database. It now relies on its Communication Safety features and parental controls on child accounts to protect its users.

“Scanning every user’s privately stored iCloud data would create new threat vectors for data thieves to find and exploit,” said Erik Neuenschwander, Apple’s director of user privacy and child safety. “It would also inject the potential for a slippery slope of unintended consequences.”

“Scanning for one type of content, for instance, opens the door for bulk surveillance — and could create a desire to search other encrypted messaging systems across content types,” he continued. Some countries, including the US, have put pressure on Apple to allow such surveillance.

“We decided to not proceed with the proposal for a hybrid client-server approach to CSAM detection for iCloud Photos from a few years ago,” Neuenschwander added. “We concluded it was not practically possible to implement without ultimately imperiling the security and privacy of our users.”

Apple now uses Communication Safety to help protect child users.

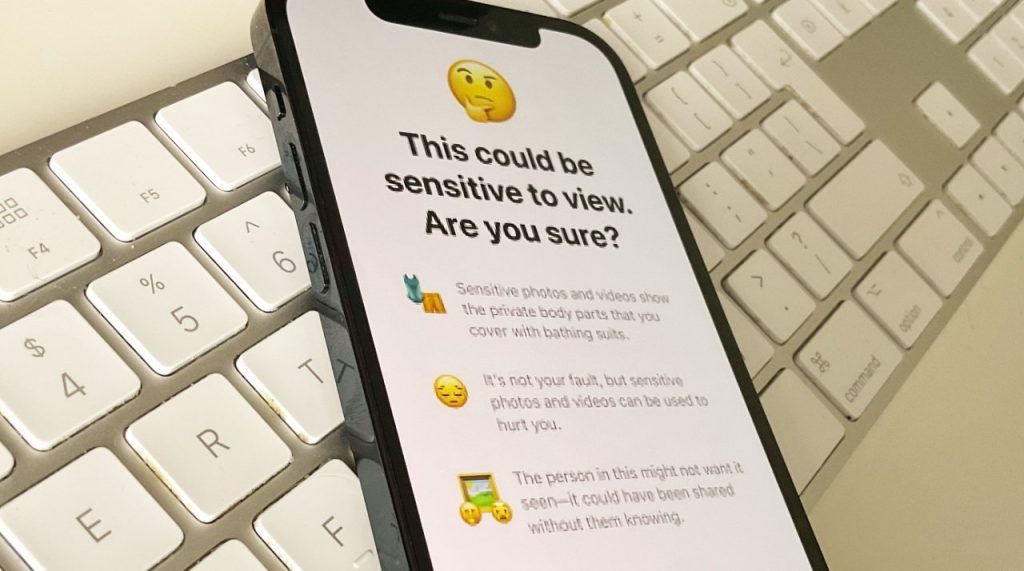

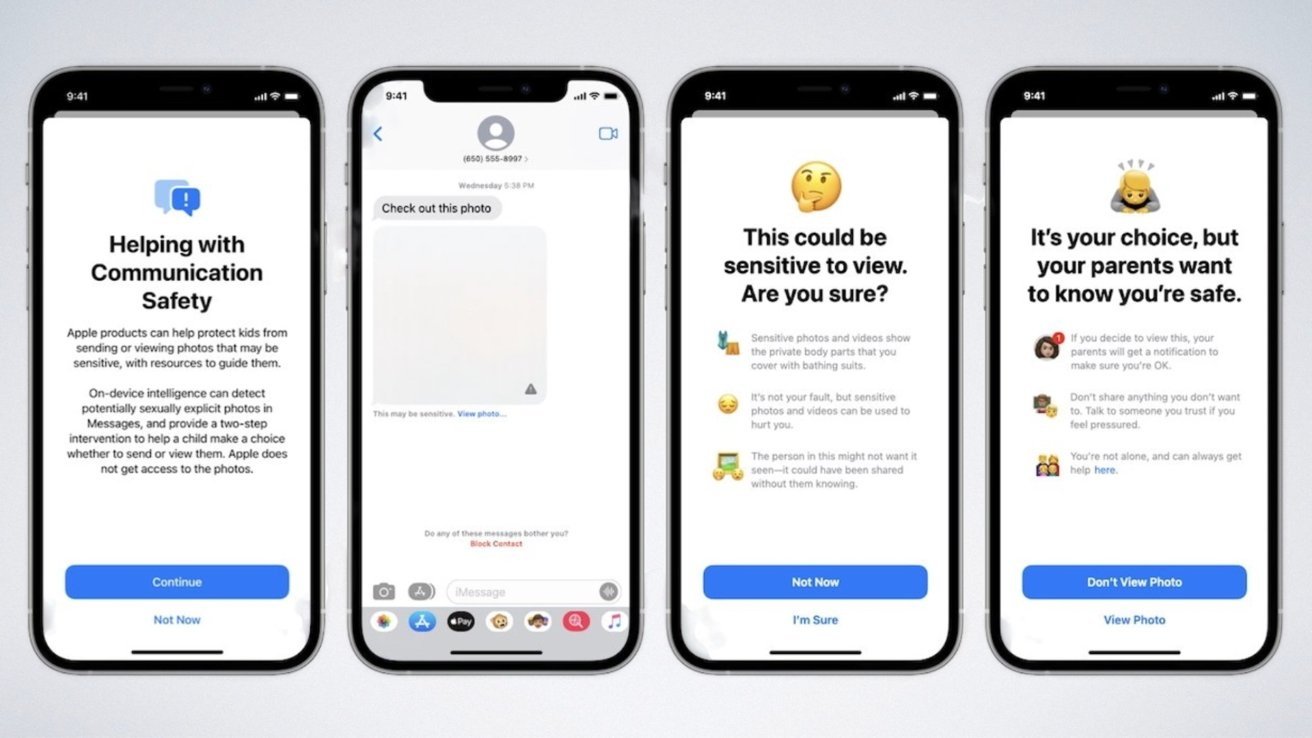

Rather than scanning iCloud Photos, Apple has opted to strengthen parental controls on child accounts, which can be set up for kids and teens under 18. This includes letting parents turn on a feature that detects nudity in images sent and received on child accounts. The feature automatically blurs such an image before the child sees it.

If a child receives or tries to send an image containing nudity, they are shown helpful resources and reassured that it’s okay if they don’t want to view the image.

The child is also given the option to message someone they trust for help.

Parents are not notified immediately when the system detects nudity in a message. Apple is concerned that premature notification could put a child at risk, including the risk of physical violence or abuse.

However, the child is warned that their parents will be notified if they go past the warnings and choose to view a blurred image containing nudity or to send one.

Some groups have called on Apple and other technology firms to go further. Apple aims to strike a balance between user privacy and harm prevention, and it continues to research ways to improve Communication Safety.